Cognitive science and big data analytics as applied to future networks

Current network management and control is largely quasi-static. This approach has worked reasonably well until now because statistical multiplexing smooths bursty traffic, so the management and control system only needs to react to changes in average traffic. As a result, network management and control can afford to be, and is, rather inefficient in sensing and controlling network parameters. Within the next decade there will be more statistical multiplexing, but traffic changes will not become slower. Several factors will make the network much more dynamic and will bring the management and control of a fast, dynamic network to the forefront as a significant challenge. These new dynamics and properties fall into two groups, though the two are intimately related:

Exogenous traffic statistics and service requirements (list not exhaustive)

- Wideband sensor (e.g. optical imagery) data relay to storage and processing centers.

- Large transaction flows (TB scale) generated by big data analytics.

- Services with critical time deadlines, such as fast trading, early warning, blue force tracking, and situational awareness in challenged areas.

- Real-time sensing, correlation, and data analytics of massive, global-extent computer and network data for cyber defense.

- Potential of ~50 billion data sources and sinks when the Internet of Things is fully deployed.

Network granularity and dynamics (list not exhaustive)

- The core optical network will use end-to-end flows without buffering at intermediate nodes, pushing congestion control to the network edge and to scheduling.

- A single transaction (an elephant) can fill an entire wavelength at the edge and in the core for seconds to minutes, requiring fast per-session scheduling and network reconfiguration.

- Software-defined networking (SDN), which today is rather static compared with sub-second time scales, needs a new paradigm for congestion control and failure recovery.

- The merging of wireless and optical access network architectures necessitates joint management and control of both networks at per-session rates.
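As a back-of-the-envelope check on the "seconds to minutes" figure above, the sketch below computes how long a TB-scale transaction occupies a dedicated wavelength. The per-wavelength line rates (10 and 100 Gbps) and the function name are illustrative assumptions, not values taken from the text.

```python
def transfer_seconds(size_bytes: float, rate_bps: float) -> float:
    """Time to drain a transaction of size_bytes over a dedicated channel."""
    return size_bytes * 8 / rate_bps

TB = 1e12  # decimal terabyte, in bytes

# Hypothetical per-wavelength line rates (assumed for illustration only)
for rate_gbps in (10, 100):
    t = transfer_seconds(1 * TB, rate_gbps * 1e9)
    print(f"1 TB at {rate_gbps} Gbps holds the wavelength for {t:.0f} s")
```

Under these assumed rates a single 1 TB flow holds a wavelength for roughly one to thirteen minutes, so scheduling decisions have to be made per session rather than per long-term traffic average.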

Many proposals exist today for the management and control of dynamic networks, especially in the SDN area and the hybrid wireless/optical access network area, but most, if not all, have yet to adequately address the problem of massive scalability at speed. This daunting problem will not be solved without new models and insightful techniques that convert a hitherto sloppy and sleepy discipline into a rigorous science.

Examples suggesting the utility of more scientific models and techniques

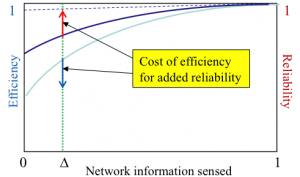

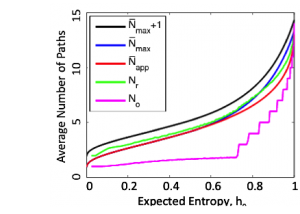

To illustrate the scalability problem of large-scale networks, Fig. 1(a) [3] notionally plots achievable network efficiency and reliability against the fraction of network information sensed. The exact shape of this curve is currently unknown; scattered points exist, based on ad hoc (usually sensible) algorithms, but their optimality is unknown. A quantitative, scientific approach to network management and control would let us determine which network information should be sensed, and how much of it. A specific example is described in [1]: to establish large flows over access and wide area networks, multiple probes are sent down candidate paths, whose availability is periodically updated by the control plane, to find open connections. A complete broadcast of updates of all flow states in a future dynamic wide area network would be on the order of hundreds of Gbps, making such large-scale per-flow switching almost impossible. In [1], we proposed a scheme that updates state less frequently and instead uses a highly collapsed scalar, the sampled entropy of the network, to help pick candidate paths. The broadcast state information is reduced by three orders of magnitude at the expense of a manageable ~50% increase in the number of paths probed, Fig. 1(b). In [3], we use only compressed state information about subnets to find routes that can probabilistically guarantee message delivery delay. In [5], we derived the information-theoretic tight lower bound on the minimum effort required to completely determine the state of a fiber network and found an algorithm that achieves the bound. That example, though idealized, suggests the utility of a rigorous scientific treatment of network management and control.
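As a rough illustration of how an information-theoretic bound of this kind can arise, consider the following sketch. It rests on an assumed model and is not a restatement of the result in [5]: suppose the network has $m$ links that fail independently, each with probability $p$, and that each probe returns a single yes/no answer. The sequence of probe outcomes then forms a prefix-free binary description of the network state $S$, so the expected number of probes $\bar{N}$ cannot be smaller than the entropy of the state:

```latex
\bar{N} \;\ge\; H(S) \;=\; m\,h_b(p),
\qquad
h_b(p) = -p\log_2 p - (1-p)\log_2(1-p).
```

Because $h_b(p) < 1$ whenever $p \ne 1/2$, the bound under these assumptions lies below one probe per link, which leaves room for adaptive probing strategies that sense much less than the full state.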

(a) (b)

Fig. 1 (a) Trade-off of fraction of network information sensed vs network efficiency and reliability; (b) Required number of paths probed with per-flow sensing vs collapsed entropy sensing. The bottom curve uses full state information; the top group uses only sampled entropy and stale state information.
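The trade between update frequency and probing effort can be sketched with a toy model. This is a minimal sketch under stated assumptions, not the scheme of [1]: each candidate path is modeled as an independent two-state (free/busy) Markov chain, and the transition rates `lam` and `mu`, the success target, and all function names are hypothetical. As the last state report ages, the entropy of the path state rises, and more paths must be probed to find an open one.

```python
import math

def p_free_after(age, lam, mu):
    """P(path is free now | it was reported free `age` seconds ago) for a
    two-state continuous-time Markov chain.
    lam: free->busy rate, mu: busy->free rate (hypothetical values)."""
    pi_free = mu / (lam + mu)  # long-run fraction of time the path is free
    return pi_free + (1.0 - pi_free) * math.exp(-(lam + mu) * age)

def binary_entropy(p):
    """Uncertainty, in bits, about a path that is free with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)

def probes_needed(p_free, target=0.99):
    """Smallest number of paths to probe so that at least one is open with
    probability >= target, assuming independent paths (an assumption)."""
    if p_free <= 0.0:
        raise ValueError("a path that is never free cannot be found open")
    if p_free >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_free))

# Older state reports -> higher entropy -> more probes for the same target.
for age in (0.1, 1.0, 10.0):
    p = p_free_after(age, lam=0.5, mu=0.5)
    print(f"age={age}s  P(free)={p:.3f}  "
          f"entropy={binary_entropy(p):.3f} bits  probes={probes_needed(p)}")
```

The design point is the one made in the text: a single scalar summarizing uncertainty is cheap to broadcast, and its cost shows up as a modest increase in the number of paths probed rather than as control-plane bandwidth.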

Stochastic dynamic model of network management and control

A new stochastic model of the network is needed. This model will use all existing tools and may require the development of new ones. Many previous attempts to model the network as a classical control system have had limited success, because the Internet has properties that classical control theory does not usually address, in addition to being highly nonlinear (a regime in which control theory offers fewer solutions). It is imperative that future networks make use of cognitive techniques and big data analytics for their management and control. The following distinct and significant properties of the Internet and future networks are not well treated quantitatively by current tools and techniques:

- The exogenous stochastic offered traffic changes the internal states of the network, creating a complex system that depends on external traffic and has not been well modeled.

- Large bursty data flows associated with big data analytics require network management and control at time scales ~4 orders of magnitude faster (~ms) than in current networks.

- Random delays in many feedback mechanisms, such as TCP responses and input queue buffer overflow, are not well modeled and make performance optimization very difficult.

- The “space” of all possible network architectures over which to optimize is vast and hard to characterize parametrically. In particular, the set of possible constructs of the Physical Layer and the Data Link Control Layer is highly technology dependent.

- There are many heterogeneous networks with disparate technologies and upper-layer architectures. Efficient internetworking has been and will remain a big problem, especially for networks, such as wireless and optical access networks, that must be jointly optimized [3].

- Time-critical applications were previously supported poorly and inefficiently, by over-provisioning and with reduced reliability.
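The difficulty caused by delayed feedback, noted in the list above, can be seen even in a toy model. The sketch below is an illustrative assumption, not a model from the cited work: a source sets its rate with a proportional controller that tries to hold a queue at a target length, but it observes the queue `delay` steps late. The delay is fixed rather than random to keep the run deterministic, and the gain, capacity, and target values are hypothetical.

```python
def simulate(delay, steps=200, gain=0.5, capacity=100.0, target=50.0):
    """Queue-length trajectory for a proportional rate controller that sees
    the queue length `delay` steps late (all parameters hypothetical)."""
    queue = [0.0]
    for t in range(steps):
        observed = queue[max(0, t - delay)]  # stale measurement
        rate = max(0.0, capacity + gain * (target - observed))
        queue.append(max(0.0, queue[-1] + rate - capacity))
    return queue

def late_swing(queue, window=100):
    """Peak-to-peak queue variation over the last `window` steps."""
    tail = queue[-window:]
    return max(tail) - min(tail)

# Same controller gain, different feedback delay.
for d in (0, 5):
    swing = late_swing(simulate(d))
    print(f"delay={d}: queue swing over the last 100 steps = {swing:.1f}")
```

With immediate feedback the queue settles at its target; with the same gain and a five-step delay it keeps oscillating between empty and well above target. A controller tuned without an accurate delay model can therefore be worse than no fine-grained control at all, which is why random feedback delays are listed as an open modeling problem.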

Research Frontiers

There are four fundamental, strongly interacting entities (system constructs, variables, and parameters) in a network:

- the set of technology building blocks,

- the space of possible architectures,

- network observables related to the state of the network, and

- quality-of-service metrics of the end users.

The set of available technologies, known algorithms, and other higher-layer techniques generates the space of network architectures. Sensing of the network observables is a key component of network management and control.

References

- [1] Zhang Lei and Vincent Chan, “Scalable Fast Scheduling for Optical Flow Switching Using Sampled Entropy and Mutual Information Broadcast,” IEEE/OSA Journal of Optical Communications and Networking, April 2014.

- [2] Vincent W. S. Chan, “Optical Flow Switching Networks,” Invited Paper, Special Issue, Proceedings of the IEEE, March 2012.

- [3] John Chapin and Vincent Chan, “Architecture For Future Heterogeneous, Survivable Tactical Internet in Area Access Area Denial Environment,” classified, and “Architecture Concepts For A Future Heterogeneous, Survivable Tactical Internet,” IEEE Milcom, Nov. 2013.

- [4] Matthew Carey and Vincent Chan, “Probabilistically Guaranteed Internetworking Service Architecture for Transporting Mission-Critical Data Over Heterogeneous Subnetworks,” Milcom 2015.

- [5] Wen, V.W.S. Chan, and L. Zheng, “Efficient fault-diagnosis algorithms for all-optical WDM networks with probabilistic link failures,” Journal of Lightwave Technology, Volume 23, Issue 10, pp. 3358–3371, Oct. 2005.

- [6] Zhang Lei and Vincent W.S. Chan, “Joint Architecture of Data and Control Planes for Optical Flow Switched Networks,” IEEE ICC, June 2014, Sydney, Australia.